- #How to install spark in windows how to#

- #How to install spark in windows update#

- #How to install spark in windows code#

- #How to install spark in windows zip#

- #How to install spark in windows download#

After this, you can find a Spark tar file in the Downloads folder.
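As a rough sketch, the downloaded archive can also be unpacked with Python's standard `tarfile` module instead of a separate archive tool. The helper name `extract_spark_archive` and the folders in the usage comment are illustrative assumptions, not part of the original steps.

```python
import tarfile
from pathlib import Path


def extract_spark_archive(archive: Path, target_dir: Path) -> Path:
    """Unpack a gzip-compressed Spark tar archive and return the extracted top-level folder."""
    target_dir.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # Spark archives keep everything under one top-level folder;
        # take it from the first path component of the first member.
        top_level = tar.getnames()[0].split("/")[0]
        tar.extractall(target_dir)
    return target_dir / top_level


# Example usage (hypothetical paths, adjust to your machine):
#   archive = Path.home() / "Downloads" / "spark-1.3.1-bin-hadoop2.6.tgz"
#   spark_dir = extract_spark_archive(archive, Path("C:/spark"))
```

Only unpack archives you downloaded from a source you trust, since `extractall` writes whatever paths the archive contains.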


Step 4 – (Optional) Java JDK installation. Once Java and Scala are installed, the next step is to download Spark. The package referred to here is spark-1.3.1-bin-hadoop2.6.
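The package name encodes both the Spark version and the Hadoop version it was built for. The sketch below splits such a name apart and builds a download link from it. The function names are hypothetical, and the URL pattern assumes the layout of the Apache archive site, so check the link on the official download page before relying on it.

```python
import re
from typing import NamedTuple


class SparkPackage(NamedTuple):
    spark_version: str
    hadoop_version: str


def parse_package_name(name: str) -> SparkPackage:
    """Split a name such as 'spark-1.3.1-bin-hadoop2.6' into its two versions."""
    match = re.fullmatch(r"spark-(\d+(?:\.\d+)*)-bin-hadoop(\d+(?:\.\d+)*)", name)
    if match is None:
        raise ValueError(f"Unrecognised Spark package name: {name!r}")
    return SparkPackage(match.group(1), match.group(2))


def archive_url(name: str) -> str:
    """Build a download URL. ASSUMPTION: the Apache archive directory layout."""
    pkg = parse_package_name(name)
    return f"https://archive.apache.org/dist/spark/spark-{pkg.spark_version}/{name}.tgz"


print(parse_package_name("spark-1.3.1-bin-hadoop2.6"))
print(archive_url("spark-1.3.1-bin-hadoop2.6"))
```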


First, you must have R and Java installed. On Ubuntu, Java 8 can be installed by running `sudo add-apt-repository ppa:webupd8team/jav`, then `sudo apt update`, then `sudo apt install oracle-java8-installer`. This is a bit outside the scope of this note, but let me cover a few things. Step 3 – Install the Hadoop native IO binary. The tar.gz file is available in the Downloads folder.
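Before moving on, it can help to confirm which Java version is actually on the PATH. The following is a minimal sketch using only the Python standard library. The function names are illustrative, and the parsing assumes the usual `java -version` output format, which is written to standard error.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_java_major(version_output: str) -> Optional[int]:
    """Return the major Java version found in `java -version` output, or None."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    major = int(match.group(1))
    # Java 8 and earlier report themselves as 1.x, for example "1.8.0_281".
    if major == 1 and match.group(2) is not None:
        return int(match.group(2))
    return major


def installed_java_major() -> Optional[int]:
    """Run `java -version` if java is on PATH and parse the result."""
    java = shutil.which("java")
    if java is None:
        return None
    result = subprocess.run([java, "-version"], capture_output=True, text=True)
    # The version banner normally goes to stderr; fall back to stdout just in case.
    return parse_java_major(result.stderr or result.stdout)


# Example usage:
#   major = installed_java_major()
#   print("Java not found" if major is None else f"Java {major} found")
```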

Step 7: Select the appropriate version according to your Hadoop version and click on the link marked.

Step 8: Click on the link marked, and Apache Spark will be downloaded to your system. Now we will start the installation process.


Download Spark. Now we can download Spark from the Apache Spark website. You can choose which Spark version you need and which pre-built Hadoop version it comes with.

Setting up Spark on Windows. Once your download is complete, you will have a zip file.

To add Spark NLP on a cluster, follow these steps in the Libraries tab inside your cluster:

- Install New -> PyPI -> spark-nlp -> Install
- Install New -> Maven -> Coordinates -> :spark-nlp2.12:3.4.


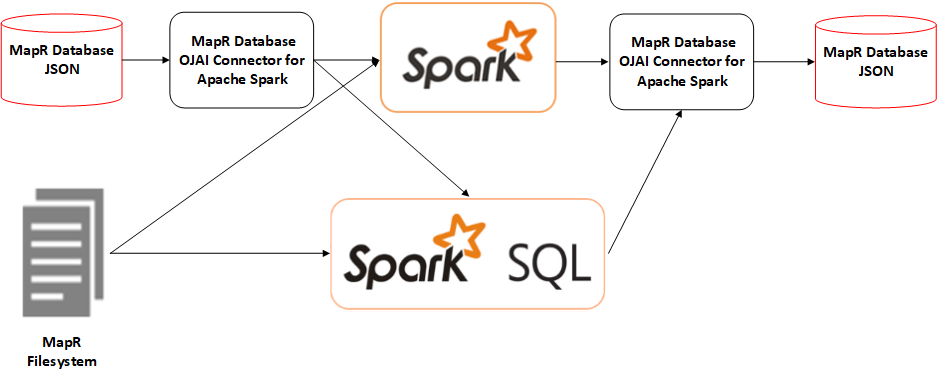

The Spark framework is a distributed engine for computations on large-scale data sets, facilitating distributed data analytics and machine learning. Learning Apache Spark is easy whether you come from a Java, Scala, Python, R, or SQL background. The installation of Apache Spark is quite simple and easier than you might think. This video on Spark installation shows how to install and set up Apache Spark on Windows.

To play with Spark, you do not need a cluster of computers. You can simply install it on your machine. When I develop with Spark, I typically write code on my local machine with a small dataset before testing it on a cluster. It is also handy for debugging if you can just run it on your local machine. You can also run Spark code on Jupyter with Python on your desktop.

For example, you can write Spark on Hadoop clusters to do transformation and machine learning easily. If you have large binary data streaming into your Hadoop cluster, writing code in Scala might be the best option because it has a native library to process binary data. For most Big Data use cases, you can use the other supported languages.

In terms of machine learning, I found the performance and development experience of MLlib (Spark’s machine learning library) to be very good, but the methods you can choose from are limited. However, as Spark goes through more releases, I think the machine learning library will mature given its popularity.

First, you will see how to download the latest release. To install Spark or PySpark, make sure you have Java 8 or higher installed on your computer. Select the latest Spark release, a prebuilt package for Hadoop, and download it directly.

For installing Apache Spark, you don’t have to run any installer. Extract the files from the downloaded tar file into any folder of your choice using 7-Zip or another unarchiving tool. Make sure that the folder path and the folder name containing the Spark files do not contain any spaces. This way, you will be able to download and use multiple Spark versions.
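The setup advice above (no installer to run, and a folder path without spaces) can be checked with a short script. This is a minimal sketch with hypothetical helper names. It assumes the extracted folder contains a `bin` subfolder and that `SPARK_HOME` is the environment variable you want to point at it, which is the common convention for Spark's launch scripts.

```python
import os
from pathlib import Path
from typing import List


def check_spark_home(spark_home: str) -> List[str]:
    """Return a list of problems found with a candidate Spark folder."""
    problems = []
    if " " in spark_home:
        problems.append("path contains spaces")
    if not (Path(spark_home) / "bin").is_dir():
        problems.append("no bin folder found; is this the extracted Spark folder?")
    return problems


def configure_environment(spark_home: str) -> None:
    """Set SPARK_HOME for the current process and put Spark's bin folder first on PATH."""
    os.environ["SPARK_HOME"] = spark_home
    bin_dir = str(Path(spark_home) / "bin")
    os.environ["PATH"] = bin_dir + os.pathsep + os.environ.get("PATH", "")


# Example usage (hypothetical folder name):
#   issues = check_spark_home(r"C:\spark\spark-1.3.1-bin-hadoop2.6")
#   if not issues:
#       configure_environment(r"C:\spark\spark-1.3.1-bin-hadoop2.6")
```

These settings only last for the running Python process. To make them permanent on Windows, set the same variables through the system's environment variable settings.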

Apache Spark is a powerful framework that uses cluster computing for data processing, streaming and machine learning. It also has multi-language support with Python, Java and R. Spark is easy to use and comparatively faster than MapReduce.
